Angular: Enhancing Error Handling with Automatic Request Retries (Backoff Strategy)

Accessing data from a backend is an essential task for most frontend applications, especially Single Page Applications built with tools like React, Next, Angular, Vue, Flutter, and many others, where all dynamic content can be loaded from a server.

Under ideal conditions, requests made to the backend occur seamlessly, and the expected result is returned. This is the scenario in which we spend most of our time developing, testing, and validating applications, which takes place in a controlled environment.

However, it is crucial to bear in mind that, in the real-world usage of a web application, unforeseen situations can arise, so we need to be prepared to handle the unexpected.

Even with a well-crafted development and testing infrastructure, simulating and testing all possible failure scenarios that may occur during the use of a production application is a challenging task.

In a real-world usage context, many things can change.

Your web application may be rendered in various browsers, in numerous versions. It can run on different devices with different operating systems. It will likely be used on devices with unstable internet connections or even on low-performance hardware. These factors can result in connection drops, and if such a drop occurs during an application request, problems will arise.

Depending on how your application is built, this can impact the application's state, causing the user to experience errors when submitting a form, making a purchase on your site, or performing any other interaction that requires a server request.

Using the retry() operator

Thanks to the RxJS library, we have an operator built precisely to address this need. We can use the 'retry' operator to 'resubscribe' to the observable returned by HttpClient and automatically resend the last failed request.

The significant advantage is that this operator is easily configurable and allows us to create true strategies (or policies, if you prefer to call them that) for retrying failed requests.

Here's a simple example of what the implementation looks like.

import { HttpClient } from '@angular/common/http';

import { Component, OnInit } from '@angular/core';

import { retry } from 'rxjs';

@Component({

selector: 'app-root',

templateUrl: './app.component.html',

})

export class AppComponent implements OnInit {

constructor(public httpClient: HttpClient) { }

ngOnInit(): void {

this.httpClient

.get('http://localhost:3000/books')

.pipe(retry(3))

.subscribe((res) => {

console.log('Successful response: ', res);

});

}

}

To test this implementation, we created a simple server with Express.js containing a '/books' route that randomly returns an HTTP 500 error:

const express = require('express');

const cors = require('cors');

const app = express();

const port = 3000;

app.use(cors({

origin: 'http://localhost:4200'

}));

app.get('/books', (req, res) => {

const randomNum = Math.random();

if (randomNum > 0.5) {

res.status(500).send('Internal Server Error');

} else {

res.json({ message: 'Hello world!' });

}

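});

// Start the server on the configured port

app.listen(port, () => {

console.log(`Server listening on http://localhost:${port}`);

});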

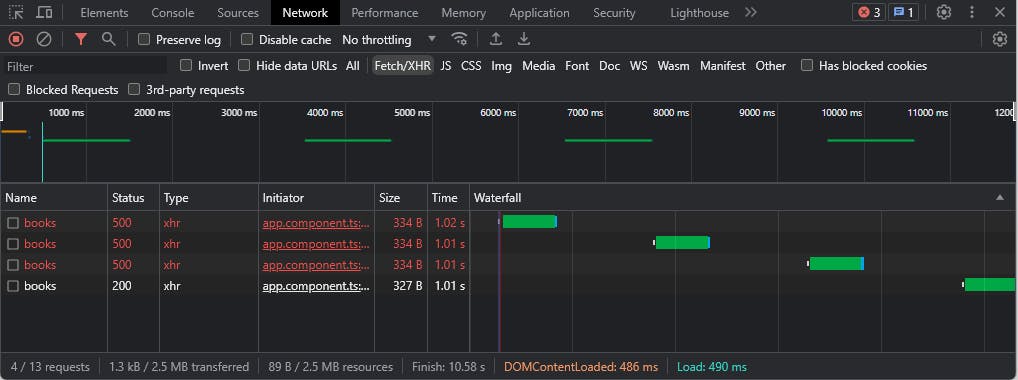

And this is how it works in practice:

In the example above, the first request encountered an error. So, the retry kicked in twice: the first retry resulted in an error, triggering a second retry, which was successful, eliminating the need for further retries. Quite straightforward, isn't it?
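To make the counting explicit, here is a framework-free sketch of the same behavior: one initial attempt plus up to three retries. The names `retrySync` and `flakyCall` are hypothetical helpers written for this illustration, not part of RxJS or Angular, and the sketch is synchronous only to keep it short.

```typescript
// Hypothetical helper: call `operation`, and on failure try again up to
// `maxRetries` more times. This mirrors the counting used by retry(3):
// 1 initial attempt + at most 3 retries = at most 4 calls in total.
function retrySync<T>(operation: () => T, maxRetries: number): T {
  let lastError: unknown;
  for (let attempt = 0; attempt <= maxRetries; attempt++) {
    try {
      return operation(); // success: stop retrying immediately
    } catch (err) {
      lastError = err; // remember the failure and move on to the next attempt
    }
  }
  throw lastError; // every attempt failed: surface the last error
}

// Hypothetical flaky call that fails twice and then succeeds,
// like the sequence described above.
let calls = 0;
function flakyCall(): string {
  calls++;
  if (calls <= 2) {
    throw new Error(`attempt ${calls} failed`);
  }
  return 'Hello world!';
}

const retryResult = retrySync(flakyCall, 3);
console.log(retryResult, 'after', calls, 'calls'); // Hello world! after 3 calls
```

Because the third call succeeds, the last available retry is never used.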

Additionally, we can include the 'catchError' operator to perform some handling when all retry attempts fail:

// Requires: import { Observable, catchError, retry, throwError } from 'rxjs';

ngOnInit(): void {

this.httpClient

.get('http://localhost:3000/books')

.pipe(retry(3), catchError(this.handleError))

.subscribe((res) => {

console.log('Successful response: ', res);

});

}

private handleError(err: any): Observable<any> {

console.log('All retry attempts failed:', err);

// Re-throw the original error so subscribers still receive its details

return throwError(() => err);

}

Adding a delay between retries:

Now that we have the basics working, we can enhance our implementation by including a time interval between requests. This strategy is useful to avoid overloading the server with multiple calls in quick succession. By adding a waiting time, you allow the environment to stabilize before receiving the next request, reducing the likelihood of consecutive failures.

To implement the delay logic, we just need to replace the parameter passed to the 'retry' operator with an object of the RetryConfig interface. In this object, we specify the number of attempts and the delay interval in milliseconds.

private getWithSimpleRetry(): void {

this.httpClient

.get('http://localhost:3000/books')

.pipe(

retry({ count: 3, delay: 1000 }),

catchError(this.handleError)

).subscribe((res) => {

console.log('Successful response: ', res);

});

}

In the 'Waterfall' column of the DevTools, you can see a blank space between the retry requests. That represents our 1-second delay in practice!

Using Progressive Delay and Exponential Delay (Backoff Strategy)

The waiting period between retry attempts is commonly known as a backoff strategy (or backoff policy). We'll use this terminology from now on.

In the previous example, we waited the same interval of time between each request, meaning a fixed backoff time.

A fixed backoff time may be suitable for scenarios where failures are rare, retry attempts are generally successful, and there are no significant congestion issues.

However, when facing recurring failures, congestion, or resource limitations, there are other more interesting approaches that allow for a better response and system recovery, such as linear backoff and exponential backoff strategies.

Let's take a closer look at how their implementations work:

Linear Backoff

In this approach, the delay between attempts increases linearly with each retry. To achieve this, we need to multiply the index of the current attempt by an initial delay value.

In our example below, the initial delay is 1000 ms (1 second), and the attempt index starts at 1, so the delays will be: 1 × 1000 ms, 2 × 1000 ms, 3 × 1000 ms, 4 × 1000 ms, and so on.

private getWithLinearBackoff(): void {

this.httpClient

.get('http://localhost:3000/books')

.pipe(

retry({

count: 3,

delay: (_error: any, retryCount: number) => timer((retryCount) * 1000),

}),

catchError(this.handleError)

).subscribe((res) => {

console.log('Successful response: ', res);

});

}

In the example above, we used the RetryConfig interface of the retry operator. Its delay property can be either a number or a function that returns an observable. This function receives two parameters, error and retryCount: the error that triggered the retry and the index of that attempt (starting at 1), respectively.
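As a quick check of the arithmetic, the delay rule can be pulled out into a plain function and evaluated for each retry. The name `linearDelay` is a hypothetical helper for this sketch; it only reproduces the multiplication used inside the delay callback.

```typescript
// Hypothetical helper mirroring the delay callback used with retry():
// retryCount is 1 for the first retry, 2 for the second, and so on.
function linearDelay(retryCount: number, initialDelayMs: number): number {
  return retryCount * initialDelayMs;
}

// Delays for count: 3 and an initial delay of 1000 ms.
const linearSchedule = [1, 2, 3].map((retryCount) => linearDelay(retryCount, 1000));
console.log(linearSchedule); // [ 1000, 2000, 3000 ]
```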

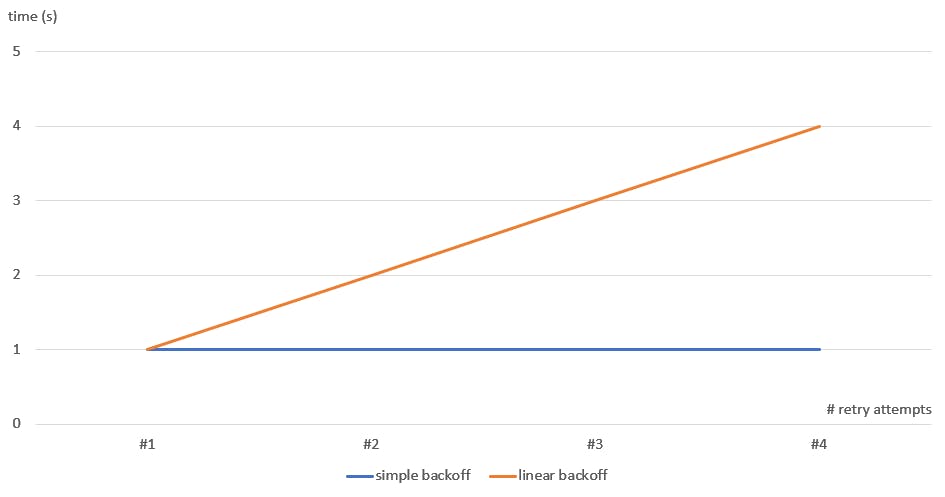

When we compare the delay time of each attempt on a graph, it becomes clear why we call it linear backoff.

This approach is well suited to the following situations:

Occasional Failures: If the system occasionally faces temporary failures, and retry attempts have a good chance of success with a new call, linear backoff can be a reasonable choice. In this case, a simple linear increase in waiting time provides a straightforward and efficient approach.

Minimize Total Waiting Time: If the total waiting time is a critical consideration, and prolonged delays are undesirable, linear backoff may be the preferred option. As the waiting time increases linearly, the total waiting time for all attempts is lower than with exponential backoff.

Exponential Backoff

We've seen that the linear strategy is simple and easy to implement, but it may not be ideal for all situations. There are scenarios where it is recommended to use more sophisticated backoff strategies, such as exponential backoff.

As the name suggests, in exponential backoff, the interval between attempts increases exponentially with each retry.

For this implementation, we'll again use an initial delay of 1 second. However, this time, the multiplication factor will be 2 raised to the power of the current attempt index. This way, the delays will be 2^1 × 1000 ms, 2^2 × 1000 ms, 2^3 × 1000 ms, 2^4 × 1000 ms (that is, 2, 4, 8, and 16 seconds), and so on.

private getWithExponentialBackoff(): void {

this.httpClient

.get('http://localhost:3000/books')

.pipe(

retry({

count: 3,

delay: (_error: any, retryCount: number) => {

const delayTime = Math.pow(2, retryCount) * 1000;

return timer(delayTime);

},

}),

catchError(this.handleError)

).subscribe((res) => {

console.log('Successful response: ', res);

});

}

Here, the body of the delay function is split across two extra lines only to make it easier to understand.

This strategy is suitable for situations such as:

Congestion or Persistent Failures: If the system faces frequent congestion or persistent failures, exponential backoff is more appropriate. Exponentially increasing the waiting time helps deal with these situations, allowing the system to recover more effectively.

Limited Resources: If the infrastructure has limited resources, such as memory, bandwidth, processing power, and the like, exponential backoff can be useful to avoid overloading these resources. The exponential increase in waiting time helps reduce the concentration of simultaneous attempts.

This approach behaves quite differently from linear backoff. It gives the environment more time to stabilize and reduces congestion, since the exponential increase spreads retry attempts further apart. However, it also brings the inevitable disadvantage of a longer total waiting time.
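To put numbers on that trade-off, the sketch below adds up the waiting time for three retries under each strategy, using the same 1000 ms initial delay as the examples. The helpers `exponentialDelay` and `totalWait` are hypothetical names for this illustration, and only the time spent waiting between attempts is counted, not the duration of the requests themselves.

```typescript
// Hypothetical helper mirroring the exponential delay callback:
// 2^retryCount multiplied by the initial delay.
function exponentialDelay(retryCount: number, initialDelayMs: number): number {
  return Math.pow(2, retryCount) * initialDelayMs;
}

// Sums the delays for retries 1..retries using the given delay rule.
function totalWait(delayFor: (retryCount: number) => number, retries: number): number {
  let total = 0;
  for (let retryCount = 1; retryCount <= retries; retryCount++) {
    total += delayFor(retryCount);
  }
  return total;
}

const fixedTotal = totalWait(() => 1000, 3); // 1000 + 1000 + 1000
const linearTotal = totalWait((retryCount) => retryCount * 1000, 3); // 1000 + 2000 + 3000
const exponentialTotal = totalWait((retryCount) => exponentialDelay(retryCount, 1000), 3); // 2000 + 4000 + 8000

console.log({ fixedTotal, linearTotal, exponentialTotal });
// { fixedTotal: 3000, linearTotal: 6000, exponentialTotal: 14000 }
```

With only three retries the gap is already noticeable, and it widens quickly as the retry count grows.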

Applying Backoff Strategy Globally

We want to enhance the resilience of our application as a whole, not just for a single call. To achieve this, we need to extend our backoff strategy to all HTTP requests made by the application. To accomplish this goal, we can create an HTTP interceptor. This interceptor will be responsible for incorporating the backoff logic into all requests.

Let's see how to implement this below:

First, we'll create a file named backoff.interceptor.ts with the following code:

import { HttpEvent, HttpHandler, HttpInterceptor, HttpRequest } from "@angular/common/http";

import { Injectable } from "@angular/core";

import { Observable, retry, timer } from "rxjs";

@Injectable()

export class BackoffInterceptor implements HttpInterceptor {

intercept(req: HttpRequest<any>, next: HttpHandler): Observable<HttpEvent<any>> {

return next.handle(req)

.pipe(

retry({

count: 3,

delay: (_error: any, retryCount: number) => {

const delayTime = Math.pow(2, retryCount) * 1000;

return timer(delayTime);

}

})

);

}

}

An interceptor is an Angular feature that allows the interception and processing of HTTP requests and responses. I won't delve into more details about how an interceptor works, as this is a feature that deserves a dedicated publication exclusively for it.

Once the interceptor is created, you just need to register it in the main module of the application (in our case, app.module.ts).

import { NgModule } from '@angular/core';

import { BrowserModule } from '@angular/platform-browser';

import { HTTP_INTERCEPTORS, HttpClientModule } from '@angular/common/http';

import { AppComponent } from './app.component';

import { BackoffInterceptor } from 'src/core/interceptors/backoff.interceptor';

@NgModule({

declarations: [

AppComponent

],

imports: [

BrowserModule,

HttpClientModule

],

providers: [{

provide: HTTP_INTERCEPTORS,

useClass: BackoffInterceptor,

multi: true

}],

bootstrap: [AppComponent]

})

export class AppModule { }

With that done, all requests made within your application will follow the backoff strategy defined in the interceptor.

Assigning the strategy to specific HTTP errors

As you've probably noticed by this point in the article, not every error should be handled by a backoff strategy.

Some errors may be critical or indicate unrecoverable problems, such as authentication errors (401), bad requests (400), and not found (404), among various other error types that may not be suitable for your context. Limiting the backoff strategy to specific error types ensures that we apply it only to transient or temporary errors, where a new request attempt might be sufficient for success.

Let's then edit our previous code to include a check on the HTTP error code and apply the backoff strategy only to errors that are in the 'retryStatusCodes' list.

import { HttpErrorResponse, HttpEvent, HttpHandler, HttpInterceptor, HttpRequest } from "@angular/common/http";

import { Injectable } from "@angular/core";

import { Observable, retry, throwError, timer } from "rxjs";

@Injectable()

export class BackoffInterceptor implements HttpInterceptor {

retryStatusCodes = [500, 502, 503, 504];

intercept(req: HttpRequest<any>, next: HttpHandler): Observable<HttpEvent<any>> {

return next.handle(req)

.pipe(

retry({

count: 3,

delay: (err: any, retryCount: number) => {

if (err instanceof HttpErrorResponse && this.retryStatusCodes.includes(err.status)) {

const delayTime = Math.pow(2, retryCount) * 1000;

return timer(delayTime);

}

// Re-throw errors that are not eligible for retry

return throwError(() => err);

}

})

);

}

}

In our example, we are limiting the strategy only to errors related to the server or infrastructure. However, it's essential to note that the definition of codes should consider the specifics of your environment or infrastructure.

Regarding the codes used in the example above:

500 Internal Server Error: Unexpected server error during request processing. Typically indicates a server-side problem.

502 Bad Gateway: Often found in gateway or proxy scenarios. Indicates that the server acting as a gateway or proxy received an invalid response from an upstream server.

503 Service Unavailable: Indicates that the server is temporarily unable to handle the request, usually due to overload or maintenance.

504 Gateway Timeout: This status code indicates that a server acting as a gateway or proxy did not receive a timely response from an upstream server when attempting to fulfill the request.
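The eligibility check itself is simple enough to isolate as a pure function, which makes it easy to unit test without Angular. This is a minimal sketch; `isRetryableStatus` is a hypothetical name, and the list of codes is the one used in the example above.

```typescript
// Status codes treated as transient in the example above.
const retryableStatusCodes: number[] = [500, 502, 503, 504];

// Hypothetical helper: true when a failed response is worth retrying.
function isRetryableStatus(statusCode: number): boolean {
  return retryableStatusCodes.some((code) => code === statusCode);
}

console.log(isRetryableStatus(503)); // true
console.log(isRetryableStatus(404)); // false
```

Angular's HttpClient typically reports network-level failures, such as a dropped connection, with status 0, so whether to add 0 to the list is worth deciding for your own environment.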

Bonus: Extracting the code into a custom RxJS operator

Previously, we saw how to implement the retry strategy globally, applying it to all requests in the application. While this approach has several benefits, it may not be the most suitable for some scenarios.

Let's say you want to implement this strategy in a more modular and reusable way, where you can choose exactly which calls will use the strategy and which won't.

For this, let's create a file named retry-backoff.operator.ts with the following implementation:

import { HttpErrorResponse } from '@angular/common/http';

import { MonoTypeOperatorFunction, Observable, retry, throwError, timer } from 'rxjs';

const retryStatusCodes = [500, 502, 503, 504];

const maxRetries: number = 3;

const initialDelay: number = 1000;

// Custom operator: retries the source observable using exponential backoff

export function retryWithBackoff<T>(): MonoTypeOperatorFunction<T> {

return (source: Observable<T>) => {

return source.pipe(

retry({

count: maxRetries,

delay: (err: any, retryCount: number) => {

if (err instanceof HttpErrorResponse && retryStatusCodes.includes(err.status)) {

const delayTime = Math.pow(2, retryCount) * initialDelay;

return timer(delayTime);

}

return throwError(() => err);

}

}));

};

}

With that done, you just need to import our new operator and use it to implement the retry logic where you find it necessary, like in this simple GET request:

private getWithSimpleRetry(): void {

this.httpClient

.get('http://localhost:3000/books')

.pipe(

retryWithBackoff(),

catchError(this.handleError)

).subscribe((res) => {

console.log('Successful response: ', res);

});

}

Or even use it directly in the HTTP Interceptor:

import { HttpErrorResponse, HttpEvent, HttpHandler, HttpInterceptor, HttpRequest } from "@angular/common/http";

import { Injectable } from "@angular/core";

import { Observable, catchError, throwError } from "rxjs";

import { retryWithBackoff } from "../operators/retry-backoff.operator";

@Injectable()

export class BackoffInterceptor implements HttpInterceptor {

intercept(req: HttpRequest<any>, next: HttpHandler): Observable<HttpEvent<any>> {

return next.handle(req)

.pipe(

retryWithBackoff(),

catchError((err: HttpErrorResponse) => {

// Implement your error handling logic here

return throwError(() => err);

})

);

}

}
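The operator shown earlier keeps maxRetries, initialDelay, and retryStatusCodes as module-level constants. If different calls need different policies, one option is to pass these values in as options. The sketch below covers only the decision logic, free of RxJS and Angular, so it can be tested on its own; `BackoffOptions`, `defaultBackoffOptions`, and `nextRetryDelay` are hypothetical names, not part of any library.

```typescript
// Hypothetical options object for a configurable backoff policy.
interface BackoffOptions {
  maxRetries: number;
  initialDelayMs: number;
  retryStatusCodes: number[];
}

// Defaults matching the constants used in the operator above.
const defaultBackoffOptions: BackoffOptions = {
  maxRetries: 3,
  initialDelayMs: 1000,
  retryStatusCodes: [500, 502, 503, 504],
};

// Returns the delay in milliseconds before the next retry,
// or null when the error should not be retried.
function nextRetryDelay(
  statusCode: number,
  retryCount: number,
  options: BackoffOptions = defaultBackoffOptions
): number | null {
  const eligible = options.retryStatusCodes.some((code) => code === statusCode);
  if (!eligible || retryCount > options.maxRetries) {
    return null;
  }
  return Math.pow(2, retryCount) * options.initialDelayMs;
}

console.log(nextRetryDelay(503, 1)); // 2000
console.log(nextRetryDelay(404, 1)); // null
console.log(nextRetryDelay(503, 4)); // null (retry budget exhausted)
```

Inside an operator, a null result would map to re-throwing the error and a number would map to timer(delay), following the same branches as the delay callback shown earlier.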

Conclusion

Implementing an intelligent retry policy to handle temporary failures allows developers to provide users with a more consistent and seamless experience, even in situations of network instability or server overload.

The flexibility offered by Angular for creating custom interceptors enables developers to tailor the retry strategy to the specific needs of the business, making their application more adaptable and efficient.